Much of what we learn from our astronomical telescopes reaches us either as images and videos or as raw data made interpretable through plots in real or Fourier space. But what if we could render that data for the other human senses? In this blog article I briefly discuss the mechanics of data sonification as I understand it.

I recently came across the Audio Universe initiative (https://www.audiouniverse.org/home), which aims to collect tools that help a wide range of audiences, from scientists, outreach managers and educators to students and the general public, represent data as sound. Beyond the research potential of sonification, what particularly caught my eye was its potential to make astronomy, or essentially any field, more accessible and inclusive for people with vision-related disabilities and other Special Educational Needs and Disabilities (SEND).

I will give a brief run-through of the general pipeline behind sonification. It can be done quite easily in Python with readily accessible data.

- Load the essential libraries: Pandas, NumPy and Pickle for reading, manipulating, processing and storing data. MIDIUtil (https://github.com/MarkCWirt/MIDIUtil) is a Python library for writing multi-track Musical Instrument Digital Interface (MIDI) files, which is what lets us turn numerical data into musical notes. Finally, AudioLazy (https://pypi.org/project/audiolazy/) is a Digital Signal Processing package with a range of features for processing digital audio signals. We mainly use its str2midi function, which converts a note written as a string (e.g. "C4") into the corresponding MIDI note number.

- Load the data for the observed astrophysical phenomenon, normalize it and initialize the set of notes to map onto. One can, for example, use a few octaves of a specific scale.

- Set the minimum and maximum velocities (roughly, loudness) of the notes. Astrophysical processes often unfold over hours to days, so we need to compress time for sonification (unless one wants a days-long track :)). We do this by choosing how many days or hours of data each beat represents (depending on the process), then setting the beats per minute to get the desired total duration of the track.

- Build the list of MIDI numbers for the chosen notes using the str2midi function from AudioLazy. Map the y-axis data (say, flux) to MIDI notes and velocities, and save the MIDI file using functions from the MIDIUtil library.

- Finally, one can use audio editing software such as GarageBand to calibrate and tune the output MIDI file.
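The steps above can be sketched in a few dozen lines of Python. This is a minimal, hypothetical sketch rather than the exact pipeline described: it uses plain lists instead of Pandas/NumPy so it runs without extra dependencies, `note_to_midi` is a hand-written stand-in for AudioLazy's `str2midi` (assuming the common convention C4 = 60, A4 = 69), and the velocity range 35 to 127, the pentatonic note set and the toy light curve are illustrative choices, not real data. Only `write_midi` needs MIDIUtil, and it uses just `MIDIFile`, `addTempo`, `addNote` and `writeFile`.

```python
# Minimal sonification sketch: turn a light curve (time, flux) into MIDI note events.
# Assumptions: plain lists instead of Pandas/NumPy; note_to_midi is a hypothetical
# stand-in for audiolazy's str2midi; all numbers below are illustrative only.

NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def note_to_midi(name):
    """Convert a note name such as 'C4', 'F#3' or 'Bb5' to a MIDI number (C4 = 60)."""
    semitone = NOTE_OFFSETS[name[0].upper()]
    rest = name[1:]
    if rest.startswith("#"):    # sharp raises the note by one semitone
        semitone, rest = semitone + 1, rest[1:]
    elif rest.startswith("b"):  # flat lowers the note by one semitone
        semitone, rest = semitone - 1, rest[1:]
    return 12 * (int(rest) + 1) + semitone


def normalize(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # flat signal: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def sonify(times_days, flux, note_names, days_per_beat=1.0, vel_min=35, vel_max=127):
    """Return (beat, midi_pitch, velocity) tuples, one per data point."""
    midi_notes = [note_to_midi(n) for n in note_names]
    t0 = min(times_days)
    levels = normalize(flux)
    events = []
    for t, level in zip(times_days, levels):
        beat = (t - t0) / days_per_beat                          # time compression: days -> beats
        idx = round(level * (len(midi_notes) - 1))               # higher flux -> higher note
        velocity = round(vel_min + level * (vel_max - vel_min))  # higher flux -> louder note
        events.append((beat, midi_notes[idx], velocity))
    return events


def track_duration_seconds(events, bpm):
    """Time in seconds from the first note to the start of the last note at a given tempo."""
    last_beat = max(beat for beat, _, _ in events)
    return last_beat * 60.0 / bpm


def write_midi(events, path, bpm=60, note_beats=1.0):
    """Write events to a single-track MIDI file (requires: pip install MIDIUtil)."""
    from midiutil import MIDIFile

    mf = MIDIFile(1)        # one track
    mf.addTempo(0, 0, bpm)  # track 0, time 0, tempo in beats per minute
    for beat, pitch, velocity in events:
        # track, channel, pitch, start time (beats), duration (beats), velocity
        mf.addNote(0, 0, pitch, beat, note_beats, velocity)
    with open(path, "wb") as f:
        mf.writeFile(f)


if __name__ == "__main__":
    # Toy, made-up light curve (NOT real data): time in days, flux in arbitrary units.
    times = [0, 1, 2, 3, 4]
    flux = [1.0, 3.0, 5.0, 3.0, 1.0]
    notes = ["C4", "D4", "E4", "G4", "A4"]  # one octave of the C major pentatonic scale
    events = sonify(times, flux, notes, days_per_beat=0.5)
    print(events)  # [(0.0, 60, 35), (2.0, 64, 81), (4.0, 69, 127), (6.0, 64, 81), (8.0, 60, 35)]
    print(track_duration_seconds(events, bpm=120))  # 4.0
```

With these toy values, `days_per_beat=0.5` turns 4 days of data into 8 beats, which at 120 beats per minute spans 4 seconds; calling `write_midi(events, "toy.mid", bpm=120)` would then save a file that can be opened in GarageBand. Mapping the same normalized flux to both pitch and velocity is just one simple choice; a second data column could drive the velocity instead.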

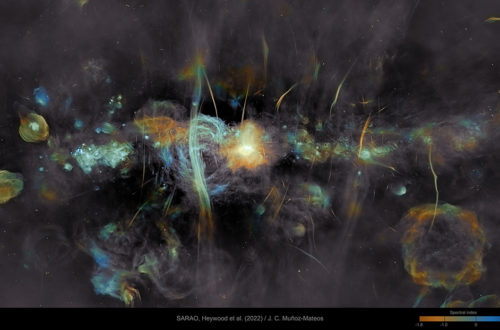

There is also this code run-through with Matt Russo, which covers most of what is discussed above, if you want to try this yourself with your own datasets. To close out this article, here is a sonification of M87* from the YouTube channel of NASA's Marshall Space Flight Center (X-ray (Chandra): NASA/CXC/SAO; Optical (Hubble): NASA/ESA/STScI; Radio (ALMA): ESO/NAOJ/NRAO; Sonification: NASA/CXC/SAO/K.Arcand, SYSTEM Sounds (M. Russo, A. Santaguida)).